The patent does not specify smartphones or any other specific form factor; it refers to the device generally as an apparatus. Several AUs combine to form a string that is detected and matched to the emotion label that best fits the string.
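
As a rough illustration of matching an AU string to its best-fitting emotion label, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the prototype AU combinations are simplified examples of the kind commonly cited in the FACS literature, not the patent's table or the EMFACS rules, and Jaccard overlap with a `min_score` cutoff is just one plausible way to define "best fit".

```python
from typing import Dict, FrozenSet, Iterable, Optional, Tuple

# Illustrative prototypes only (assumed for this sketch, not taken from
# the patent or from the EMFACS instructions).
PROTOTYPES: Dict[str, FrozenSet[int]] = {
    "happiness": frozenset({6, 12}),
    "sadness": frozenset({1, 4, 15}),
    "surprise": frozenset({1, 2, 5, 26}),
    "anger": frozenset({4, 5, 7, 23}),
}


def jaccard(a: FrozenSet[int], b: FrozenSet[int]) -> float:
    """Overlap between two AU sets: |intersection| / |union|."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def best_emotion(
    detected: Iterable[int], min_score: float = 0.5
) -> Tuple[Optional[str], float]:
    """Return the best-fitting label and its score.

    The label is None when no prototype reaches min_score
    (a hypothetical cutoff chosen for this sketch).
    """
    aus = frozenset(detected)
    label, score = max(
        ((name, jaccard(aus, proto)) for name, proto in PROTOTYPES.items()),
        key=lambda pair: pair[1],
    )
    return (label if score >= min_score else None, score)
```

With these assumed prototypes, `best_emotion([6, 12])` returns `("happiness", 1.0)`, and a partial match such as `best_emotion([1, 2, 5])` returns `("surprise", 0.75)`. A real system would need a validated prototype table and probably AU intensities as well.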

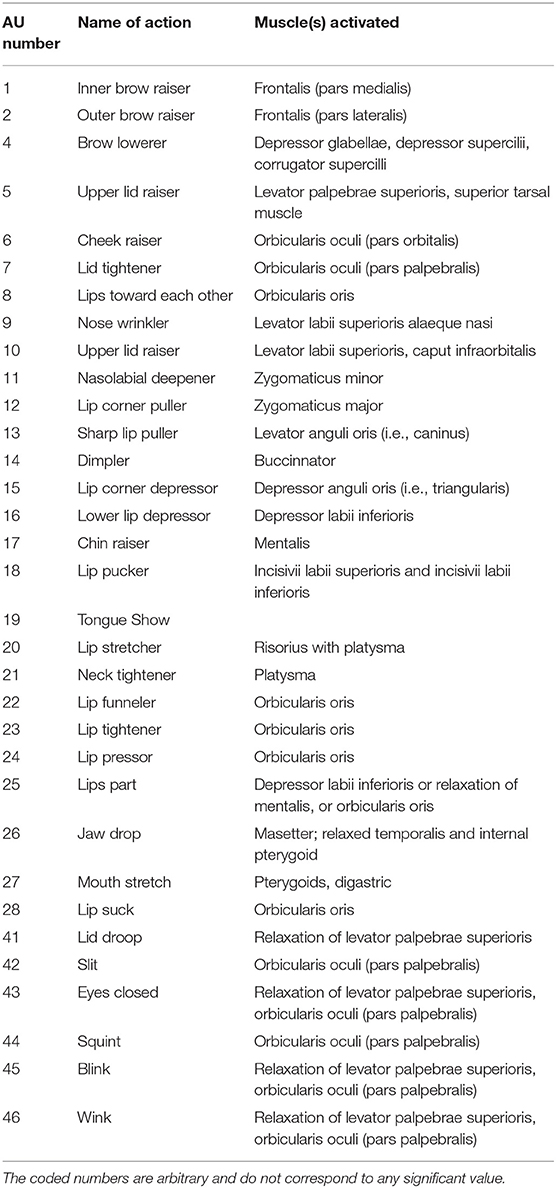

The AUs are components of a facial-action coding system.

EMFACS (Emotion FACS) is a selective application of FACS scoring in which coders score only behavior that is likely to have emotional significance. To do this, the coder scans the video for core combinations of events that have been found to suggest certain emotions (much like the prototypes in Table 10-2). Coders score only the events in a video record that contain such core combinations; they use FACS coding to score those events, but they do not code everything on the video. EMFACS saves time because one is not coding everything. The drawback is that intercoder agreement can be harder to reach, as the coders have to agree on two things: 1) whether to code an event (a result of their online scanning of the video for the core combinations) and 2) how to code the events they have chosen to code. EMFACS is only a set of instructions on how to FACS code selectively in this way. Bear in mind that EMFACS coding still yields FACS codes, so the data have to be interpreted into emotion categories.

The EMFACS instructions are available only to people who have passed the FACS final test. The reason is that people must have mastery of FACS before applying rules to use it selectively in this way: only people who know FACS as a comprehensive system can correctly apply it on a selective basis. This is based on the wishes of the authors of EMFACS, Paul Ekman and Wallace Friesen, and it makes good sense. If you take the final test and pass, you can receive the document.
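
The two agreement questions for EMFACS coders (whether to code an event, and how to code it) can be measured separately. The sketch below is an assumption-laden illustration, not part of EMFACS itself: the segment ids, the `Coding` type and both function names are hypothetical, and the scoring measure is the ratio often used for FACS reliability, twice the number of AUs both coders agreed on divided by the total number of AUs the two coders scored.

```python
from typing import Dict, Set

# Hypothetical representation: segment id -> set of AUs scored for it.
Coding = Dict[int, Set[int]]


def selection_agreement(a: Coding, b: Coding) -> float:
    """Share of segments coded by both coders among those coded by either."""
    either = a.keys() | b.keys()
    both = a.keys() & b.keys()
    return len(both) / len(either) if either else 1.0


def scoring_agreement(a: Coding, b: Coding) -> float:
    """Agreement ratio over segments that both coders chose to code:
    2 * (AUs agreed on) / (AUs scored by coder a + AUs scored by coder b).
    """
    shared = a.keys() & b.keys()
    agreed = sum(len(a[k] & b[k]) for k in shared)
    total = sum(len(a[k]) + len(b[k]) for k in shared)
    return 2 * agreed / total if total else 1.0


# Made-up example data for two coders.
coder_a: Coding = {1: {6, 12}, 2: {1, 4, 15}, 4: {4, 5, 7}}
coder_b: Coding = {1: {6, 12}, 2: {1, 4}, 3: {9}}

print(selection_agreement(coder_a, coder_b))  # 2 shared of 4 segments
print(scoring_agreement(coder_a, coder_b))    # 2 * 4 agreed / 9 scored
```

Reporting the two numbers separately shows whether disagreement comes from event selection or from the FACS scoring itself, which a single combined figure would hide.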
